We just got through reporting on the nuclear backlash to Wendy’s plan for AI-driven ‘dynamic pricing’. Now we hear that a major Canadian university has pulled vending machines from its campus. Because the machines were spying on students!

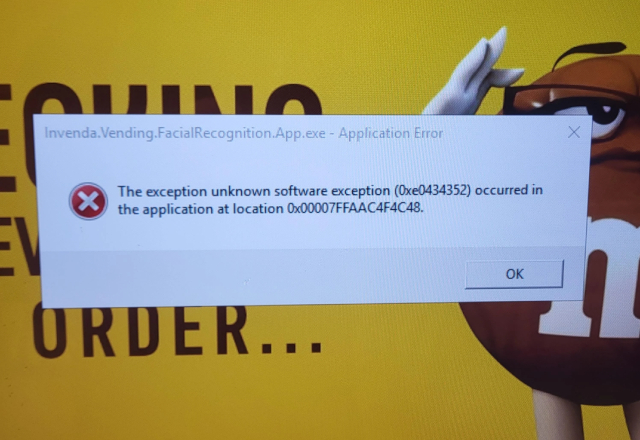

The error message that blew Invenda’s cover: Waterloo’s digitally savvy students were quick to realize what it implied…

Invasive AI strikes again

The University of Waterloo was at the forefront of computer and programming technology at the dawn of it all, back in the 1960s. And it’s still a widely respected seat of digital development. So it’s ironic that Waterloo was recently the scene of a scandal involving clandestine spy technology.

It seems a vending company was given the contract to place 29 Invenda ‘smart’ food machines around the campus, selling M&Ms. The machines were attractive, sleek and state-of-the-art, featuring touchscreen menus and selection displays.

But they also harboured a sinister secret: the Invenda machines included cameras connected to a facial recognition system. The machines blew their own cover when at least one flashed a Windows system message reporting an ‘Invenda Facial Recognition App Error’. Students were appalled, and they immediately complained to the administration.

“The natural question that follows there is, ‘Why does it have a facial recognition app? How can this error even exist?’” said River Stanley, a fourth-year computer science and business student who broke the story in the campus journal mathNEWS.

What it does

It has since come to light that the Invenda AI app in question is designed to perform ‘facial analysis’, not ‘facial recognition’. And Invenda says there’s a crucial difference. “People detection solely identifies the presence of individuals whereas, facial recognition goes further to discern and specify individual persons,” the company said in a statement.

Invenda says its system is intended to gather basic customer information such as gender and age. Those data points are then analysed to determine the best mix of products to offer in each machine, based on who’s using it.

But that doesn’t explain why Invenda used the term ‘facial recognition’ in a 2020 promotional video.

Makes business sense

Sharon Polsky, President of the Privacy and Access Council of Canada, based in Calgary, says, “From a business perspective, [the strategy] absolutely makes sense.” Polsky told CBC News she’d like to see “an investigation into the Invenda machines,” presumably to confirm that they are not infringing individuals’ privacy rights, “and stricter privacy legislation overall.”

“It’s terrific that people are noticing these affronts to our privacy,” she noted. “And not just shrugging [their] shoulders and saying ‘Not a big deal’.”

An invasion of privacy?

The question remains: Is the Invenda system violating Canada’s privacy laws? Or, by not retaining specific information on specific individuals, is it operating ‘just within the law’? That one could take a lot of time and court deliberation to answer.

Among the privacy issues raised by Waterloo students is the fact that the machines don’t disclose, in any way, that facial data is being gathered. Not even a symbol to indicate that a camera is present.

My take

Whether this facial analysis software is illegally invasive or not remains up for debate. But I don’t like it. The assumption these days, among tech companies, seems to be that consumers love tech and will go along with whatever they offer, like sheep to the shearing. What I really detest is the clandestine manner in which Invenda deployed it. That just indicates that the company knew (or at least feared) a consumer backlash if the truth were known. But they went ahead with the tech anyway.

Big Brother is literally watching! And at least one expert in the field predicts the tech will persist in our daily environments in some form or another, regardless of what the courts may eventually decide. “The genie is kind of out of the bottle here,” warns Doug Stephens, founder of Retail Prophet. “I don’t see this [technology] as being something that’s simply going to go away.”

*SIGH*

~ Maggie J.